Meta’s AI research team, known as FAIR (Fundamental AI Research), is pushing robotics forward with new technologies that help robots “feel,” move dexterously, and interact with people. The goal is robots that are not only technically capable but can also handle real-world tasks in ways that feel natural and comfortable to the people around them. Here’s a quick overview of what FAIR has announced and why it matters.

Consider everyday actions such as picking up a cup of coffee, stacking dishes, or shaking hands. Each one depends on a sense of touch and precise motor control that we take for granted. Robots struggle to acquire these abilities because they usually rely on vision or preprogrammed instructions.

Meta’s latest tools aim to help robots overcome these limits. By giving robots a sense of “touch” through improved sensors and systems, these technologies may let robots perform tasks with human-like sensitivity and adaptability. This could open up new applications for robots in fields such as healthcare, manufacturing, and virtual reality.

1. Meta Sparsh:

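Sparsh is a general-purpose AI encoder for vision-based tactile sensors. It lets AI perceive texture, pressure, and movement, information that vision alone cannot capture. Unlike many task-specific models, Sparsh learns from unlabeled tactile data through self-supervised learning, which makes it more adaptable and accurate across a wide range of tasks and sensors.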

2. Meta Digit 360:

Digit 360 is an advanced artificial fingertip with human-like touch sensitivity. It can detect minute changes in surface texture and very small forces, capturing touch detail comparable to that of a human finger. A wide-angle lens covers the entire fingertip, letting the sensor “see” in all directions, and it can also sense temperature changes. These capabilities make it well suited to delicate work such as medical procedures and detailed virtual interactions.

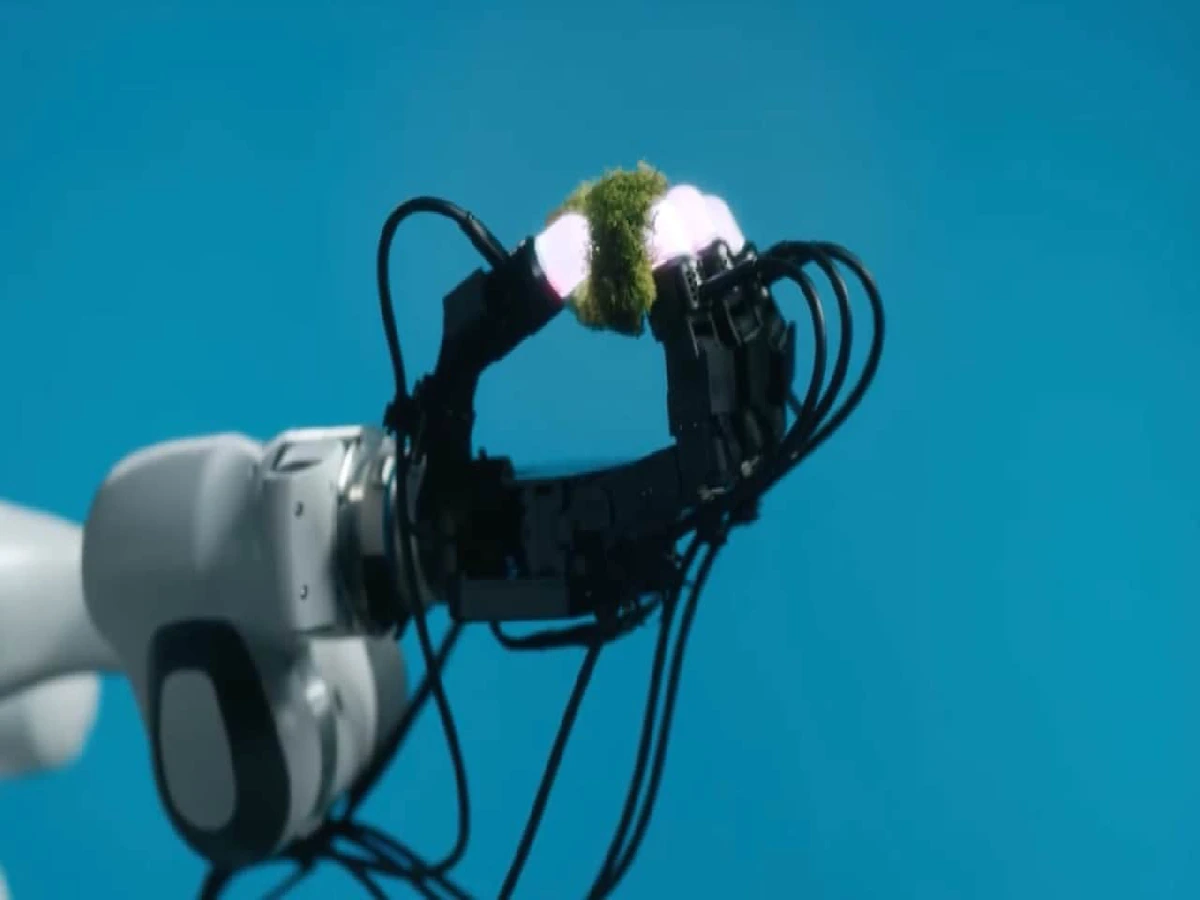

3. Meta Digit Plexus:

Plexus is a hardware-software platform that integrates multiple touch sensors on a single robotic hand, giving it a sense of touch from the fingertips to the palm. This makes it easier for researchers to build robotic hands capable of the fine-grained control that human hands have.